Have you ever looked at your cloud bill at the end of the billing cycle and wondered whether you really needed to pay that much, especially for something as basic as storage? Well, that’s all of us.

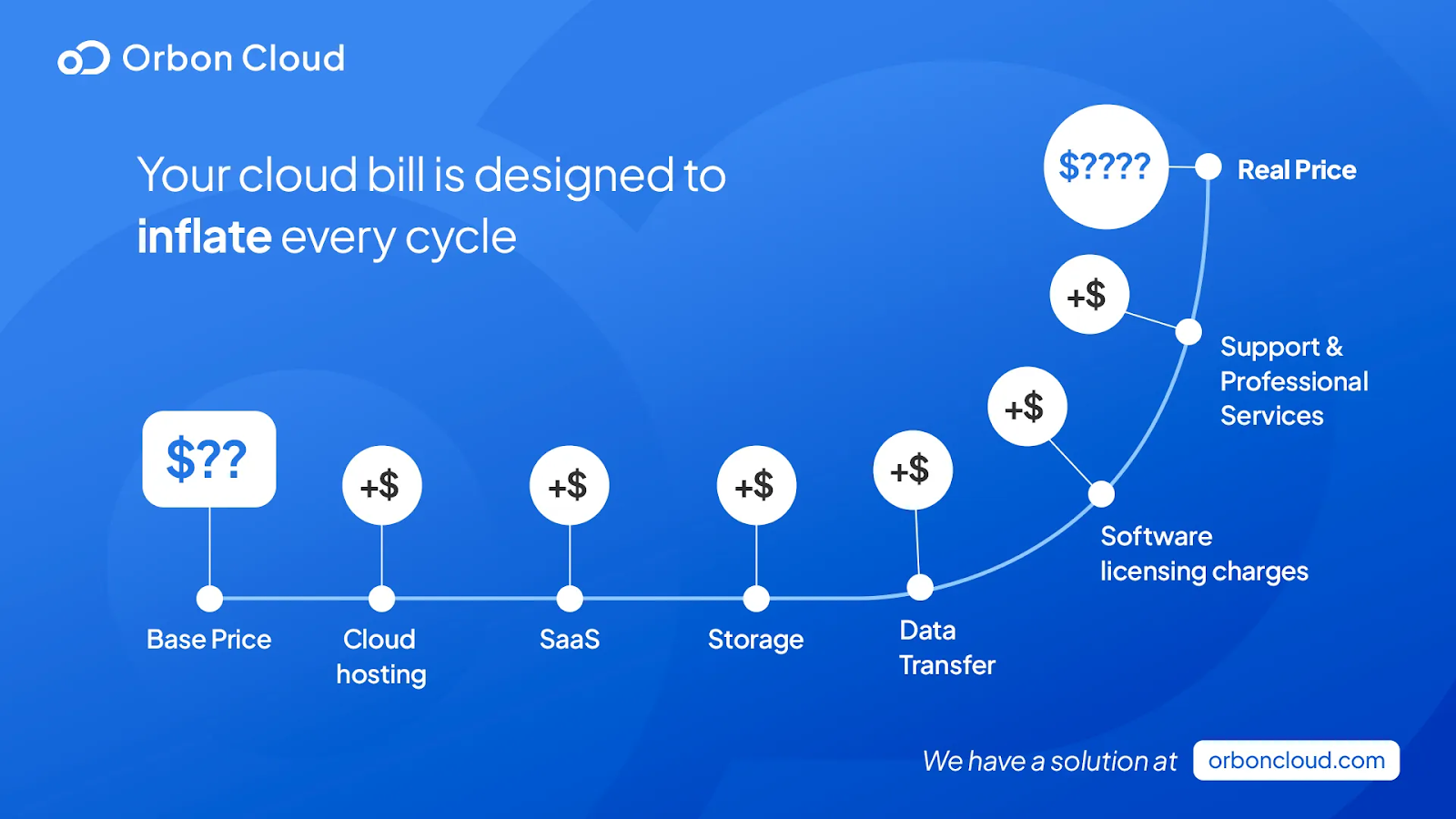

Your cloud bill is built to inflate, and the hidden ‘tax’ inside it is there for a reason. It is not a mistake; it is the result of how legacy cloud providers like AWS price infrastructure once real systems are running and scaling. Most teams budget for visible resources: compute hours, storage size, and managed databases. What they underestimate is everything that happens in between.

That gap between what is planned and what is billed grows quietly, and by the time anyone notices, it has already become a recurring cost. This article explains where that hidden tax comes from, how it accumulates, and, ultimately, how to avoid it.

AWS does not price workloads. It prices details.

Each service exposes a narrow unit of value: compute hours, storage size, request volume, and data transferred. That model is internally consistent, but it does not reflect how production systems behave. Applications do not run in isolation. They generate traffic between services, across availability zones, and out to the internet as a side effect of normal operation. Early cost models assume linear growth. In reality, usage grows laterally, and those secondary costs are metered permanently. That is the hidden tax.

But let’s stay within the scope of this article: storage, where data transfer sits at the centre of the cost. Most architectures move far more data than teams expect. Traffic flows between zones for resilience, between services for modularity, and externally for users and integrations. AWS charges for much of this movement. The pricing is visible, but the triggers are subtle. A multi-AZ load balancer, synchronous replication, or a cross-zone service call can all generate transfer charges without any explicit decision to “move data.”

What makes this expensive is not the unit price, but the volume, frequency, and most importantly, the access conditions. Because the charges are fragmented across services, teams rarely notice them until they are embedded in the monthly bills.
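
To see how volume and frequency compound, here is a rough Python estimate of monthly cross-AZ transfer charges. It assumes AWS's published rate at the time of writing of $0.01/GB billed in each direction (so $0.02/GB for every gigabyte that crosses an AZ boundary); check current pricing for your region. The 200 GB/day replica is a hypothetical example, not a measured workload.

```python
# Rough monthly estimate of cross-AZ data transfer charges.
# ASSUMPTION: $0.01/GB billed on each side of an inter-AZ transfer
# (so $0.02/GB in total); verify against current AWS pricing for your region.
RATE_PER_GB_EACH_DIRECTION = 0.01


def monthly_cross_az_cost(gb_per_day: float, days: int = 30) -> float:
    """Cost of `gb_per_day` crossing an AZ boundary every day for `days` days."""
    gb_per_month = gb_per_day * days
    # Both the sending and the receiving side are billed.
    return gb_per_month * RATE_PER_GB_EACH_DIRECTION * 2


# Hypothetical example: a replica that syncs 200 GB/day to another AZ.
print(f"${monthly_cross_az_cost(200):,.2f} per month")  # $120.00 per month
```

No single transfer looks expensive at a cent per gigabyte; the bill comes from the same traffic repeating every day, indefinitely.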

The most frustrating line items on any cloud bill are the charges for resources that are dead to you, yet very much alive when your AWS bill comes in. In the cloud, “what is dead may never die”.

The most common offender is what we call the "Orphaned Volume". Consider a typical workflow: you spin up an EC2 instance for testing and attach a 500GB storage volume to it. A week later, you terminate the instance. You assume the storage attached to it is deleted, too.

In most cases, it isn't. By default, EC2 sets the DeleteOnTermination flag only on the root volume, so the root volume is deleted with the instance while any additional volumes persist. You end up paying a monthly fee for a 500GB hard drive floating in digital space, attached to nothing and doing nothing.
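
A minimal audit sketch in Python, assuming the volume list has the shape of EC2 `DescribeVolumes` output (`VolumeId`, `Size`, `State`, `Attachments`). The flat $0.08 per GB-month is an assumption (the gp3 list price in us-east-1 at the time of writing); other volume types and regions differ. `fetch_volumes` shows how the list could be pulled live with boto3, but the example below runs on hypothetical sample data so it works without AWS credentials.

```python
# Flag EBS volumes that are not attached to any instance ("available" state)
# and estimate what they cost per month.
# ASSUMPTION: a flat $0.08 per GB-month (gp3 list price in us-east-1 at the
# time of writing); real prices vary by volume type and region.
GB_MONTH_RATE = 0.08


def find_orphaned_volumes(volumes: list[dict]) -> list[dict]:
    """Return volumes from a DescribeVolumes-style list with no attachments."""
    return [
        v for v in volumes
        if v.get("State") == "available" and not v.get("Attachments")
    ]


def monthly_cost(volumes: list[dict], rate: float = GB_MONTH_RATE) -> float:
    """Estimated monthly cost of keeping these volumes around."""
    return sum(v["Size"] for v in volumes) * rate


def fetch_volumes() -> list[dict]:
    """Pull unattached volumes from AWS (needs boto3 and credentials)."""
    import boto3  # imported lazily so the helpers above work without it

    ec2 = boto3.client("ec2")
    paginator = ec2.get_paginator("describe_volumes")
    volumes = []
    for page in paginator.paginate(
        Filters=[{"Name": "status", "Values": ["available"]}]
    ):
        volumes.extend(page["Volumes"])
    return volumes


# Offline example with the 500 GB test volume (hypothetical sample data).
sample = [
    {"VolumeId": "vol-test", "Size": 500, "State": "available", "Attachments": []},
    {"VolumeId": "vol-root", "Size": 8, "State": "in-use",
     "Attachments": [{"InstanceId": "i-live"}]},
]
orphans = find_orphaned_volumes(sample)
print([v["VolumeId"] for v in orphans], f"${monthly_cost(orphans):,.2f}/month")
```

The sketch only reports; deleting a volume is a separate, deliberate step once you have confirmed nothing still needs it.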

This "tax" extends to IP addresses as well. Public IPv4 addresses are scarce, and to prevent hoarding, AWS charges for them. An Elastic IP used to be free while attached to a running instance, with the meter starting only once that instance stopped or the address sat unattached. Since February 2024, AWS bills every public IPv4 address at $0.005 per hour whether it is attached or not, so an idle Elastic IP is pure waste. It is effectively a parking fine for a car you aren't driving.
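
A minimal sketch for spotting idle Elastic IPs, assuming `addresses` has the shape of EC2 `DescribeAddresses` output (in practice, boto3's `describe_addresses()["Addresses"]`) and that you have separately mapped instance IDs to their states. The $0.005/hour rate and the ~730-hour month are assumptions to check against current pricing; the IPs below are documentation-range sample values.

```python
# Flag Elastic IPs that sit unassociated or point at a non-running instance.
# ASSUMPTION: $0.005 per public IPv4 address-hour (AWS's rate since
# February 2024) and roughly 730 hours in a month.
HOURLY_RATE = 0.005
HOURS_PER_MONTH = 730


def find_idle_eips(addresses: list[dict], instance_states: dict) -> list[str]:
    """Return public IPs doing no useful work.

    `addresses` follows the shape of EC2 DescribeAddresses output, and
    `instance_states` maps instance IDs to states like "running" or "stopped".
    """
    idle = []
    for addr in addresses:
        instance_id = addr.get("InstanceId")
        if "AssociationId" not in addr:
            idle.append(addr["PublicIp"])  # allocated, attached to nothing
        elif instance_id and instance_states.get(instance_id) != "running":
            idle.append(addr["PublicIp"])  # attached to an instance not running
        # Associated with something other than an instance (e.g. a network
        # interface with no InstanceId): left alone in this sketch.
    return idle


# Sample data using documentation-range IPs.
addresses = [
    {"PublicIp": "203.0.113.10", "AllocationId": "eipalloc-a"},
    {"PublicIp": "203.0.113.11", "AllocationId": "eipalloc-b",
     "AssociationId": "eipassoc-b", "InstanceId": "i-stopped"},
    {"PublicIp": "203.0.113.12", "AllocationId": "eipalloc-c",
     "AssociationId": "eipassoc-c", "InstanceId": "i-running"},
]
states = {"i-stopped": "stopped", "i-running": "running"}
idle = find_idle_eips(addresses, states)
print(idle, f"${len(idle) * HOURLY_RATE * HOURS_PER_MONTH:,.2f}/month")
```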

Then there is the issue of using the wrong cloud service for data backup. We all agree that backups are vital and that long-term retention is sometimes necessary, but without a specialized storage utility like Orbon Storage and the strict governance it provides, backups can become a financial hazard. Many teams set up automated scripts to snapshot their databases daily, but fail to implement a cleanup policy. Three years later, they are still paying premium storage rates for hundreds of snapshots of a development server that was decommissioned in 2020.
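
The missing cleanup policy can start as a simple retention check. This Python sketch assumes snapshot entries shaped like EC2 `DescribeSnapshots` output (`SnapshotId`, `VolumeSize` in GB, and a timezone-aware `StartTime`, which is how boto3 returns it). The $0.05 per GB-month rate is an assumed us-east-1 list price, and because EBS snapshots are incremental, pricing each one at its full volume size gives an upper bound rather than the exact charge. The snapshot IDs and dates are hypothetical.

```python
from datetime import datetime, timedelta, timezone

# ASSUMPTION: $0.05 per GB-month for standard-tier EBS snapshot storage
# (us-east-1 list price at the time of writing). Snapshots are incremental,
# so pricing each one at its full VolumeSize gives an upper bound.
SNAPSHOT_GB_MONTH = 0.05


def expired_snapshots(snapshots, retention_days, now=None):
    """Return DescribeSnapshots-style entries older than the retention window."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=retention_days)
    return [s for s in snapshots if s["StartTime"] < cutoff]


def max_monthly_cost(snapshots):
    """Upper-bound monthly cost, pricing every snapshot at its full size."""
    return sum(s["VolumeSize"] for s in snapshots) * SNAPSHOT_GB_MONTH


# Sample data: two snapshots of a server decommissioned in 2020, one recent.
now = datetime(2023, 6, 1, tzinfo=timezone.utc)
snapshots = [
    {"SnapshotId": "snap-old-1", "VolumeSize": 100,
     "StartTime": datetime(2020, 3, 1, tzinfo=timezone.utc)},
    {"SnapshotId": "snap-old-2", "VolumeSize": 100,
     "StartTime": datetime(2020, 3, 2, tzinfo=timezone.utc)},
    {"SnapshotId": "snap-new", "VolumeSize": 100,
     "StartTime": datetime(2023, 5, 30, tzinfo=timezone.utc)},
]
stale = expired_snapshots(snapshots, retention_days=30, now=now)
print([s["SnapshotId"] for s in stale], f"up to ${max_monthly_cost(stale):,.2f}/month")
```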

The "hidden tax" isn't just about accidental waste; it is often embedded in the very architecture of your storage strategy. In a storage context, its most aggressive components are per-request API fees, egress (data transfer out) charges, and the storage fees themselves. That is why it takes strategy to pick the right stack for efficient storage, and for the right use case.
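
To make those components concrete, here is a rough Python model of one month of an S3 Standard bill. The rates are assumptions: first-tier us-east-1 list prices at the time of writing, ignoring volume tiers, free allowances, and other regions. The workload figures are hypothetical.

```python
# Rough monthly S3 Standard bill, split into storage, API, and egress.
# ASSUMPTION: first-tier us-east-1 list prices at the time of writing;
# tiers, free allowances, and other regions are ignored.
STORAGE_PER_GB_MONTH = 0.023   # S3 Standard storage
PUT_PER_1000 = 0.005           # PUT, COPY, POST, LIST requests
GET_PER_1000 = 0.0004          # GET, SELECT requests
EGRESS_PER_GB = 0.09           # data transfer out to the internet


def s3_monthly_bill(stored_gb: float, put_requests: int,
                    get_requests: int, egress_gb: float) -> dict:
    """Return the estimated cost of each component for one month."""
    return {
        "storage": stored_gb * STORAGE_PER_GB_MONTH,
        "api": (put_requests / 1000) * PUT_PER_1000
               + (get_requests / 1000) * GET_PER_1000,
        "egress": egress_gb * EGRESS_PER_GB,
    }


# Hypothetical month: 10 TB stored, 5M PUTs, 20M GETs, 2 TB served out.
bill = s3_monthly_bill(10_000, 5_000_000, 20_000_000, 2_000)
for item, cost in bill.items():
    print(f"{item:>8}: ${cost:,.2f}")
print(f"   total: ${sum(bill.values()):,.2f}")
```

Only the storage line is predictable from capacity alone; the API and egress lines depend on how the data is used, which is exactly where budgets drift.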

The use case we will focus on is Big Data storage, especially backups for disaster recovery. The solution is to decouple your storage from your compute using Orbon Storage. By treating storage, especially data backups, as an independent, utility-based resource, you eliminate the variable costs that make AWS bills unpredictable. This doesn’t mean dumping AWS; it means adding a specialized utility to handle your storage needs cost-efficiently.

As already mentioned, this is not a call to replace your existing AWS workflow. In fact, this strategy complements your existing architecture. The most powerful financial optimization for your storage would likely combine the favourable parts of AWS’s pricing rules with Orbon Cloud’s to create a zero-cost recovery loop.

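This works because of two pricing rules:

- AWS does not charge for data transferred into AWS from the internet (ingress).
- Orbon Cloud does not charge for data transferred out of Orbon Storage (egress).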

This creates a perfect system for off-site backups and Big Data archives. You can replicate your AWS data to Orbon Storage for safekeeping. If disaster strikes and you need to restore your data to AWS, the transfer costs you exactly $0: Orbon Cloud charges $0 to send it, and AWS charges $0 to receive it.

Compare this to storing backups in AWS S3. While the storage fee is standard, the "tax" lies in the recovery. If you need to restore data quickly, you could be hit with massive egress charges, effectively holding your own data ransom during a crisis.

Let's look at a scenario where you are storing 50TB of critical backup data. A corruption event occurs, and you need to restore that data immediately to your AWS environment for recovery.

Scenario 1: You only stored this data in AWS S3

That puts the cost of this 50TB scenario at around $5,650 for a single recovery event, and that excludes other charges such as API calls and replication.
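
One breakdown that reproduces the $5,650 figure, assuming 1 TB = 1,000 GB, one month of S3 Standard storage at $0.023/GB, and the restore billed at a flat $0.09/GB internet data-transfer-out rate:

$$
\underbrace{50{,}000\ \text{GB} \times \$0.023}_{\text{storage}} + \underbrace{50{,}000\ \text{GB} \times \$0.09}_{\text{egress}} = \$1{,}150 + \$4{,}500 = \$5{,}650
$$

Treat this as an illustration rather than a quote: AWS's egress tiers get cheaper above 10 TB per month, and data moved from S3 to EC2 within the same Region is not charged for transfer, so the egress term applies when the restore leaves AWS over the internet.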

Scenario 2: You backed up this data in Orbon Storage

That leaves the total cost for this scenario at just the standard storage subscription of $1,000 for the same recovery event.

The Result: In this scenario, you have reduced the cost of this recovery event by 82%, using Orbon Storage!
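
The 82% follows directly from the two scenario totals:

$$
1 - \frac{\$1{,}000}{\$5{,}650} \approx 1 - 0.177 = 0.823 \approx 82\%
$$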

It’s not just about the cheaper storage rate, either; it’s about predictability and eliminating billing volatility. If you use AWS for disaster recovery, your bill spikes when you are most vulnerable. With Orbon Cloud, your bill stays flat.

The AWS cloud is incredibly powerful and has strong use cases, especially compute, but its default settings prioritize availability and convenience over cost optimization, which makes it less suited to baseline tasks like storage. The "hidden tax" its storage services can incur could be what separates a CTO's hefty cloud bill from a moderate one.

You do not have to accept variable bills for a basic task like storage. By understanding the granular "taxes" on data storage and transfer, such as egress and API fees, and by using specialized utilities like Orbon Storage that eliminate those punitive fees for the same function, you can turn your cloud operations from a volatile cash burn into a fixed, predictable spend.

Don't wait for the next angry message from Finance. Audit your resources today, and stop paying tolls to access your own data.

Let Orbon Cloud help with that today.